What do I need to know about data quality?

Quality data is useful data. To be of high quality, data must be consistent and unambiguous.

Data quality issues frequently arise during database merges or when integrating systems and cloud platforms. These issues typically occur when data fields that are supposed to be compatible have inconsistent schemas or formats. Data that is not of high quality can be improved through data cleansing.
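To make the schema and format mismatch concrete, here is a minimal Python sketch. It assumes two hypothetical exports (a CRM and a billing system; the names and fields are illustrative, not from any specific product) that label the customer ID and signup date differently and use different date formats. Each source is mapped onto one shared schema before merging, and records that still disagree afterward are reported as conflicts rather than silently overwritten.

```python
from datetime import datetime

# Hypothetical exports from two systems that describe the same customers
# with different column names, ID padding, and date formats.
crm_rows = [{"CustomerID": "001", "SignupDate": "03/15/2023"}]   # MM/DD/YYYY
billing_rows = [{"cust_id": "1", "signup": "2023-03-15"}]         # ISO 8601


def normalize_crm(row):
    """Map a CRM row onto the shared schema."""
    return {
        "customer_id": int(row["CustomerID"]),  # "001" -> 1
        "signup_date": datetime.strptime(row["SignupDate"], "%m/%d/%Y").date(),
    }


def normalize_billing(row):
    """Map a billing row onto the shared schema."""
    return {
        "customer_id": int(row["cust_id"]),
        "signup_date": datetime.strptime(row["signup"], "%Y-%m-%d").date(),
    }


def merge(crm, billing):
    """Merge both sources by customer_id; report rows that still disagree."""
    merged = {}
    conflicts = []
    for row in map(normalize_crm, crm):
        merged[row["customer_id"]] = row
    for row in map(normalize_billing, billing):
        existing = merged.get(row["customer_id"])
        if existing is None:
            merged[row["customer_id"]] = row
        elif existing != row:
            conflicts.append((existing, row))  # needs cleansing or review
    return merged, conflicts


merged, conflicts = merge(crm_rows, billing_rows)
print(len(merged), len(conflicts))  # 1 0
```

Reporting conflicts instead of letting the second source win is a deliberate choice: it turns a silent merge error into a visible item for data cleansing.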

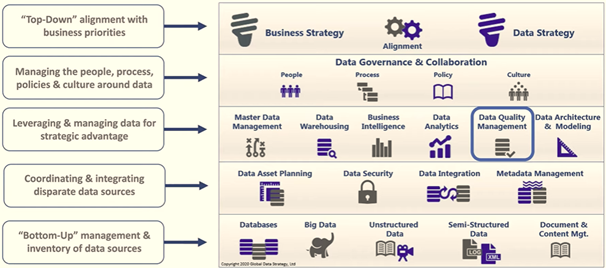

Data quality is part of a wider data strategy

A successful data strategy links business goals with technology solutions.

The Problem with Bad Data

Data quality is a challenge for many companies – and the problem is often worse than organizations realize. Wanting to work quickly to collect data and use it to optimize programs in near real-time, organizations may skip data quality assurance practices, such as establishing standards and criteria. This can easily lead to reliance on inaccurate, incomplete, or redundant data, creating a domino effect of decisions based on inaccurate numbers and metrics.

Additionally, because organizations now work with massive volumes of data, many lack the in-house data science resources to sort and correlate this information. Without the proper tools and analysts, organizations will miss out on time-sensitive optimizations.

According to one study, only 3 percent of executives surveyed had data records that fell within the acceptable range. Moreover, 65 percent of marketers are concerned about the quality of their data, and 6 out of 10 marketers list improving data quality as a top priority.

Consider the following implications of bad data:

- High Costs: IBM has estimated that poor data quality costs the US economy $3.1 trillion a year. Nearly 50 percent of newly acquired data records contain errors that could negatively affect the organization. Additionally, according to MIT, bad data can cost organizations as much as 25 percent of total revenue.

- Wrong Decisions: Basing business decisions on faulty or incomplete data can cause your team to overlook critical information. For example, the brand awareness generated by your out-of-home ads could be responsible for most conversions. However, if your company uses an incomplete attribution model, you may allocate funds to the wrong media vehicles instead of the media that is driving the most results, ultimately reducing ROI.

- Strained Customer Relationships: Bad data doesn’t just affect your advertising budget; it can damage your customer relationships as well. If bad data leads you to target customers with products and messaging that don’t match their interests and preferences, it can quickly sour them on the brand and may cause them to opt out.

What are the benefits of data quality?

Data is one of a company’s most important resources, and businesses can lose up to 20% of revenue due to poor data quality practices. Our business intelligence is only as good as the data we feed it, so we have to make sure our analysis isn’t losing value because its inputs are inaccurate.

When data is of excellent quality, it can be easily processed and analyzed, leading to insights that help the organization make better decisions. High-quality data is essential to cloud analytics, AI initiatives, business intelligence efforts, and other types of data analytics.

In addition to helping our organization extract more value from its data, the process of data quality management improves organizational efficiency and productivity, along with reducing the risks and costs associated with poor-quality data. Data quality is, in short, the foundation of the trusted data that drives digital transformation—and a strategic investment in data quality will pay off repeatedly, in multiple use cases, across the enterprise.

Data Quality vs Data Integrity

Data quality oversight is just one component of data integrity, which refers to the process of making data useful to the organization. The four main components of data integrity are:

- Data Integration: Data from disparate sources must be seamlessly integrated.

- Data Quality: Data must be complete, unique, valid, timely, consistent, and accurate.

- Location Intelligence: Location insights add a layer of richness to data and make it more actionable.

- Data Enrichment: Adding data from external sources, such as customer data, business data, or location data, provides a more complete, contextualized view of the data.
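Data enrichment can be as simple as a lookup join against an external source. The sketch below assumes a hypothetical reference table mapping postal codes to regions; in practice this would come from a licensed or open dataset, and the field names are illustrative only.

```python
# Hypothetical external reference data, e.g. a licensed postal-code dataset.
POSTAL_REGIONS = {
    "10001": "New York, NY",
    "94105": "San Francisco, CA",
}


def enrich(records, lookup):
    """Return copies of `records` with a `region` field added from `lookup`.

    Unmatched postal codes get region None so they can be reviewed,
    rather than being filled with a guessed value.
    """
    enriched = []
    for record in records:
        out = dict(record)  # copy: do not mutate the source record
        out["region"] = lookup.get(record.get("postal_code"))
        enriched.append(out)
    return enriched


customers = [
    {"id": 1, "postal_code": "10001"},
    {"id": 2, "postal_code": "99999"},
]
for row in enrich(customers, POSTAL_REGIONS):
    print(row)
```

Leaving unmatched values empty instead of guessing keeps enrichment from quietly introducing inaccurate data.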

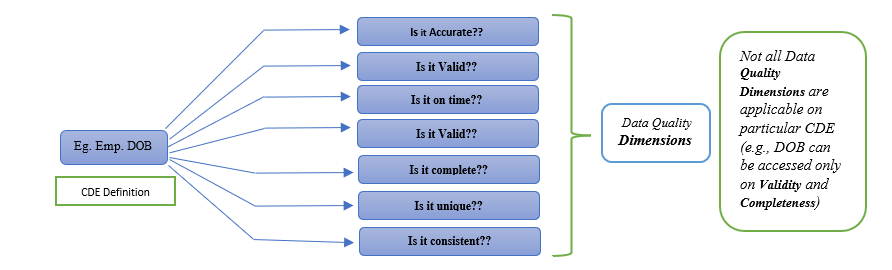

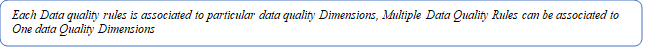

Data quality rules

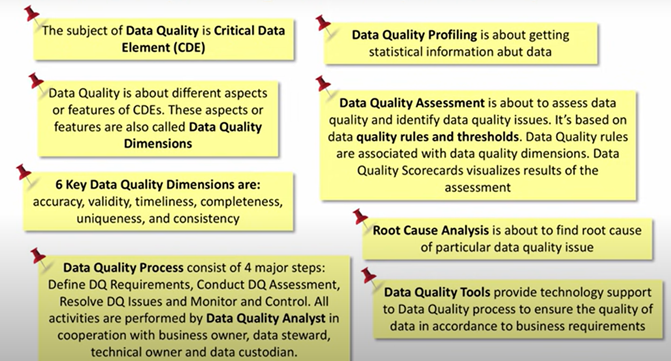

Data quality rules are business rules intended to ensure the quality of data in terms of accuracy, validity, timeliness, completeness, uniqueness, and consistency.
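Rules like these are easiest to enforce when they are written down as executable checks. The following is a minimal sketch, not any particular tool’s API: each hypothetical rule is tagged with the dimension it protects, and `evaluate` returns the names of the rules a record breaks. The email pattern is deliberately simplistic.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Callable

# Deliberately simple email pattern for illustration, not a full RFC 5322 check.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class Rule:
    name: str
    dimension: str                  # which quality dimension the rule protects
    check: Callable[[dict], bool]   # returns True when the record passes


# Hypothetical business rules for a customer-order record.
RULES = [
    Rule("email_present", "completeness", lambda r: bool(r.get("email"))),
    Rule("email_format", "validity",
         lambda r: not r.get("email") or bool(EMAIL_RE.match(r["email"]))),
    Rule("age_in_range", "validity",
         lambda r: r.get("age") is None or 0 <= r["age"] <= 120),
    Rule("shipped_after_ordered", "consistency",
         lambda r: r.get("ordered") is None or r.get("shipped") is None
         or r["ordered"] <= r["shipped"]),
]


def evaluate(record, rules=RULES):
    """Return the names of the rules this record violates."""
    return [rule.name for rule in rules if not rule.check(record)]


sample = {"email": "ann@example.com", "age": 34,
          "ordered": date(2024, 5, 3), "shipped": date(2024, 5, 1)}
print(evaluate(sample))  # ['shipped_after_ordered']
```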


Data Quality Dimensions

Data quality dimensions are aspects or features of data that can be assessed and used to determine its quality.
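Several dimensions can be turned into simple ratios. The sketch below shows one common, simplified formulation of completeness, uniqueness, and validity over a list of records; definitions vary between organizations and tools, and dimensions such as accuracy or timeliness need reference data or timestamps, so they are left out here. The helper names and sample rows are hypothetical.

```python
def _filled(rows, column):
    """Values of `column` that are neither missing nor empty strings."""
    return [r[column] for r in rows if r.get(column) not in (None, "")]


def completeness(rows, column):
    """Share of rows in which `column` is populated."""
    return len(_filled(rows, column)) / len(rows) if rows else 1.0


def uniqueness(rows, column):
    """Share of populated values in `column` that are distinct."""
    values = _filled(rows, column)
    return len(set(values)) / len(values) if values else 1.0


def validity(rows, column, is_valid):
    """Share of populated values in `column` that satisfy `is_valid`."""
    values = _filled(rows, column)
    return sum(1 for v in values if is_valid(v)) / len(values) if values else 1.0


rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "b@example.com"},
    {"id": 3},
]
print(completeness(rows, "email"))                  # 0.5
print(uniqueness(rows, "id"))                       # 0.75
print(validity(rows, "email", lambda v: "@" in v))  # 1.0
```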

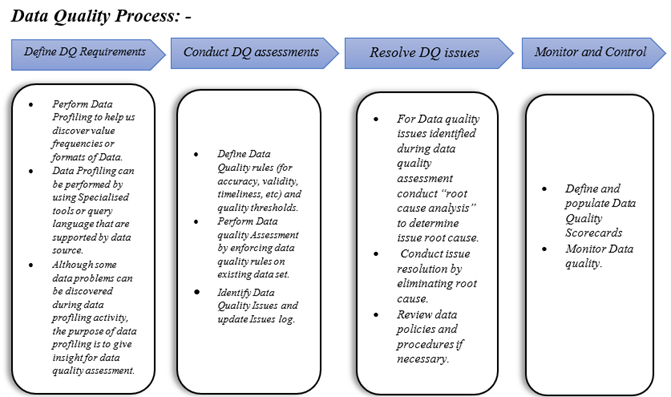

Data profiling is the process of reviewing source data to understand its structure, content, and interrelationships and to identify its potential for data projects.
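A first-pass profile can be computed with the standard library alone. This sketch assumes records are dictionaries of scalar (hashable) values; it reports, per column, how many values are missing, how many are distinct, which Python types occur, and the range when all values share one type. The sample rows are hypothetical.

```python
from collections import Counter


def profile(rows):
    """Summarise each column of a list of dict records.

    Assumes scalar (hashable) values; a missing key counts as a null.
    """
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        types = Counter(type(v).__name__ for v in present)
        entry = {
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
            "types": dict(types),
        }
        # A min/max range is only meaningful when all values share one type.
        if len(types) == 1:
            entry["min"] = min(present)
            entry["max"] = max(present)
        report[col] = entry
    return report


rows = [
    {"id": 1, "country": "US"},
    {"id": 2, "country": "us"},
    {"id": 3},
    {"id": "4", "country": "US"},
]
for column, stats in profile(rows).items():
    print(column, stats)
```

On these rows the profile surfaces two typical findings: the `id` column mixes integers and strings, and `country` has two spellings of the same code.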

Data Quality Process and System Development Life Cycle (SDLC)

The data quality process should be part of the System Development Life Cycle (SDLC).

The System Development Life Cycle (SDLC) is the process of planning, creating, testing, and deploying an information system.

Data quality team: roles and responsibilities

Data quality is one of the aspects of data governance, which aims to manage data in a way that gains the greatest value from it. The senior executive in charge of data usage and governance at the company level is the Chief Data Officer (CDO), and the CDO is the one who must assemble a data quality team.

The number of roles in a data quality team depends on the company size and, consequently, on the amount of data it manages. Generally, specialists with both technical and business backgrounds work together in a data quality team. Possible roles include:

- Data owner – controls and manages the quality of a given dataset or several datasets, specifying data quality requirements. Data owners are generally senior executives representing the team’s business side.

- Data consumer – a regular data user who defines data standards and reports errors to team members.

- Data producer – captures data, ensuring that it complies with data consumers’ quality requirements.

- Data steward – usually in charge of data content, context, and associated business rules. The specialist ensures employees follow documented standards and guidelines for data and metadata generation, access, and use. A data steward can also advise on how to improve existing data governance practices and may share responsibilities with a data custodian.

- Data custodian – manages the technical environment of data maintenance and storage. The data custodian ensures the quality, integrity, and safety of data during ETL (extract, transform, and load) activities. Common job titles for data custodians are data modeler, database administrator (DBA), and ETL developer.

- Data analyst – explores, assesses, summarizes data, and reports on the results to stakeholders.

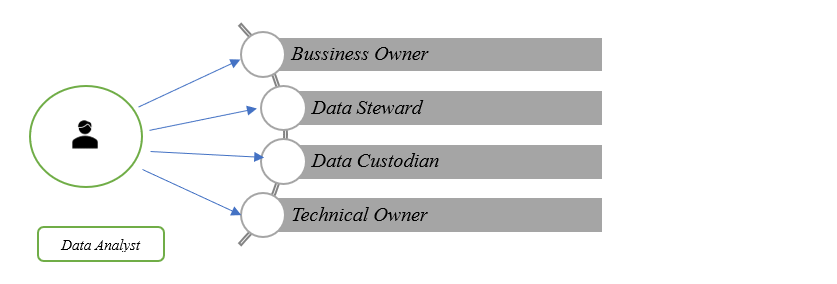

Since the data analyst is one of the key roles within a data quality team, let’s break down this person’s profile.

The data analyst works closely with the other roles on the team.

Data quality analyst: a multitasker

The data quality analyst’s duties may vary. The specialist may perform the data consumer’s duties, such as data standard definition and documentation, and maintain the quality of data before it’s loaded into a data warehouse, which is usually the data custodian’s work.

According to an analysis of job postings by Elizabeth Pierce, an associate professor at the University of Arkansas at Little Rock, and job descriptions we found online, the data quality analyst’s responsibilities may include:

- Monitoring and reviewing the quality (accuracy, integrity) of data that users enter into company systems and of data that is extracted, transformed, and loaded into a data warehouse.

- Identifying the root causes of data issues and resolving them.

- Measuring and reporting to management on data quality assessment results and ongoing data quality improvement.

- Establishing and overseeing service level agreements, communication protocols with data suppliers, and data quality assurance policies and procedures.

- Documenting the return on investment (ROI) of data quality activities.
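Overseeing service level agreements with data suppliers includes checking that their feeds arrive on time, which is the timeliness dimension in practice. The sketch below assumes hypothetical feed names and SLA windows and flags feeds whose most recent delivery is older than allowed.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical SLAs: the maximum allowed age of each supplier feed.
FEED_SLAS = {
    "supplier_a_orders": timedelta(hours=24),
    "supplier_b_prices": timedelta(hours=6),
}


def sla_breaches(last_delivered, slas=FEED_SLAS, now=None):
    """Return the feeds whose latest delivery is older than their SLA allows.

    `last_delivered` maps feed name -> timezone-aware datetime of the last
    delivery. A feed with no recorded delivery counts as a breach.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    breaches = []
    for feed, max_age in slas.items():
        delivered = last_delivered.get(feed)
        if delivered is None or now - delivered > max_age:
            breaches.append(feed)
    return breaches


check_time = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
deliveries = {
    "supplier_a_orders": datetime(2024, 1, 2, 0, 0, tzinfo=timezone.utc),  # 12 h old
    "supplier_b_prices": datetime(2024, 1, 2, 3, 0, tzinfo=timezone.utc),  # 9 h old
}
print(sla_breaches(deliveries, now=check_time))  # ['supplier_b_prices']
```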

Four Steps to Start Improving Our Data Quality

1. Discover

We can only plan our data quality journey once we understand our starting point. To do that, we need to assess the current state of our data: what we have, where it resides, its sensitivity, data relationships, and any quality issues it has.

2. Define rules

The information we gather during the discovery phase shapes our decisions about the data quality measures we need and the rules we will create to achieve the desired end state. For example, we may need to cleanse and deduplicate data, standardize its format, or discard data from before a certain date. Note that this is a collaborative process between business and IT.
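The example measures in this step (cleansing and deduplicating, standardizing formats, and discarding data from before a certain date) can be expressed as a small, ordered set of transformations. The cutoff date, field names, and choice of email as the deduplication key are assumptions made for illustration.

```python
from datetime import date

# Hypothetical cutoff: records last updated before this date are out of scope.
CUTOFF = date(2020, 1, 1)


def standardize(record):
    """Return a copy with whitespace trimmed and casing made consistent."""
    out = dict(record)
    out["email"] = record["email"].strip().lower()
    out["country"] = record["country"].strip().upper()
    return out


def apply_definitions(records, cutoff=CUTOFF):
    """Discard stale records, standardize formats, then deduplicate on email.

    The first occurrence of each normalized email is kept.
    """
    seen = set()
    result = []
    for record in records:
        if record["updated"] < cutoff:
            continue  # discard data from before the cutoff
        clean = standardize(record)
        if clean["email"] in seen:
            continue  # duplicate once formats are standardized
        seen.add(clean["email"])
        result.append(clean)
    return result


records = [
    {"email": " Ann@Example.com ", "country": "us", "updated": date(2023, 1, 5)},
    {"email": "ann@example.com", "country": "US", "updated": date(2023, 6, 1)},
    {"email": "bob@example.com", "country": "gb", "updated": date(2019, 3, 1)},
]
print(apply_definitions(records))
```

Standardizing before deduplicating matters: without it, `" Ann@Example.com "` and `"ann@example.com"` would be treated as different customers.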

3. Apply rules

Once we’ve defined rules, we integrate them into our data pipelines. Don’t get stuck in a silo: our data quality tools need to be integrated across all data sources and targets to remediate quality issues across the entire organization.
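One way to integrate rules into a pipeline is a stage that splits each batch into records that pass and records that are quarantined along with the reasons they failed. This is a hypothetical sketch rather than any specific data quality tool; the rule names are illustrative.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Check = Callable[[dict], bool]

# Hypothetical rules produced by the "define rules" step.
RULES: Dict[str, Check] = {
    "has_id": lambda r: r.get("id") is not None,
    "positive_amount": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] > 0,
}


def apply_rules(records: Iterable[dict], rules: Dict[str, Check]) -> Tuple[List[dict], List[dict]]:
    """Split records into those that pass every rule and a quarantine list.

    Quarantined entries keep the original record plus the failed rule names,
    so they can be fixed and replayed instead of silently dropped.
    """
    passed, quarantined = [], []
    for record in records:
        failed = [name for name, check in rules.items() if not check(record)]
        if failed:
            quarantined.append({"record": record, "failed": failed})
        else:
            passed.append(record)
    return passed, quarantined


batch = [{"id": 1, "amount": 10.0}, {"amount": -5}]
good, bad = apply_rules(batch, RULES)
print(len(good), bad[0]["failed"])  # 1 ['has_id', 'positive_amount']
```

Quarantining rather than dropping failed records keeps them available for root-cause analysis and reprocessing.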

4. Monitor and manage

Data quality is not a one-and-done exercise. To maintain it, we need to be able to monitor and report on all data quality processes continuously, on-premises and in the cloud, using dashboards, scorecards, and visualizations.
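A scorecard can start as per-rule pass rates from each pipeline run, with an alert when a rate falls below a target. The 95% threshold and the rule names below are assumptions; a real setup would persist these results over time to feed dashboards and trend charts.

```python
def scorecard(results, threshold=0.95):
    """Summarise per-rule pass rates and flag rules below `threshold`.

    `results` maps a rule name to a list of booleans, one per checked record
    (True = the record passed). An empty list counts as fully passing.
    """
    card = {}
    for rule, outcomes in results.items():
        rate = sum(outcomes) / len(outcomes) if outcomes else 1.0
        card[rule] = {"pass_rate": rate, "alert": rate < threshold}
    return card


# Hypothetical outcomes from one pipeline run.
run = {
    "email_format": [True, True, True, True],
    "has_id": [True, True, True, False],
}
for rule, summary in scorecard(run).items():
    print(f"{rule}: {summary['pass_rate']:.0%} {'ALERT' if summary['alert'] else 'ok'}")
```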

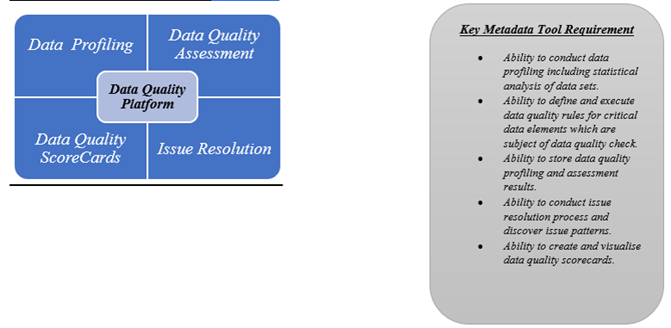


Conclusion

Data quality is a complex concept that is not straightforward to define. This article has outlined a basic definition of it.

There is a popular notion among experts that a data quality management strategy combines people, processes, and tools. Once people understand what makes data high quality in their specific industry and organization, what measures must be taken to ensure data can be monetized, and what tools can supplement and automate these measures and activities, the initiative will bring the desired business outcomes.

Data quality dimensions serve as the reference point for constructing data quality rules and metrics and for defining the data models and standards that all employees must follow from the moment they enter a record into a system or extract a dataset from third-party sources.

Ongoing monitoring, interpretation, and enhancement of data is another essential requirement that can turn reactive data quality management into proactive management. Managing high-quality, valuable data is a continuous cycle rather than a one-time project.

Author: Vivek Kumar