As organizations grow, the volume of data they collect grows with them day by day, and managing the quality of that data becomes ever more important. After all, data is the lifeblood of any organization.

Data Quality Management – What, why, how, and best practices

Data quality management combines organizational culture, technology, and data to deliver accurate results, which help businesses make smart decisions and shape their strategies. The goal is to draw reliable insights from increasingly large and complex data, using a range of processes and technologies.

How to define data quality: attributes, measures & metrics

Data quality is the state of data, tightly connected to its ability to solve business tasks. It is not simply good or bad, high or low; it is a range, a measure of the health of the data pumping through your organization. The following attributes describe it:

- Consistency: Uniform data elements across various platforms.

- Accuracy: The degree to which data values are correct, so that sound conclusions can be drawn from them.

- Completeness: All data elements have tangible values.

- Auditability: Data is accessible, and changes made to it can be traced.

- Orderliness: Data should be in the correct format and structure.

- Uniqueness: Each element/record is represented once within a dataset, which helps in avoiding duplicates.

- Timeliness: Data should represent reality within a specified period and follow corporate standards.

- Age: Data should be fresh and current, with values up to date across the board.
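
Two of these attributes, completeness and uniqueness, are easy to turn into numbers. The following is a minimal sketch rather than a standard implementation: the sample records, the field names, and the helper functions `completeness` and `uniqueness` are all hypothetical, and it assumes that `None` and the empty string both count as missing.

```python
# Hypothetical sample records; None and "" both stand for a missing value.
records = [
    {"id": 1, "name": "Jane Brown", "email": "jane@example.com"},
    {"id": 2, "name": "David Henson", "email": None},
    {"id": 3, "name": "", "email": "mary@example.com"},
    {"id": 1, "name": "Jane Brown", "email": "jane@example.com"},  # exact duplicate
]

def completeness(rows, field):
    """Share of rows in which `field` holds a non-empty value."""
    filled = sum(1 for row in rows if row.get(field) not in (None, ""))
    return filled / len(rows)

def uniqueness(rows):
    """Share of rows that remain once exact duplicates are collapsed."""
    distinct = {tuple(sorted(row.items())) for row in rows}
    return len(distinct) / len(rows)

print(f"name completeness:  {completeness(records, 'name'):.0%}")
print(f"email completeness: {completeness(records, 'email'):.0%}")
print(f"uniqueness:         {uniqueness(records):.0%}")
```

With this sample, each of the three measures comes out at 75%. Other attributes, such as timeliness or consistency, need business context (a reference date, a second system to compare against) before they can be measured in the same way.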

Why low data quality is a problem

Poor data quality can cause significant damage to a business: it leads to poor business decisions, poor customer relations, inaccurate analytics, and more.

Poor data quality can come from several sources:

- Data integration issues: Converting between formats, for example from CSV to an Excel spreadsheet, can lead to data loss.

- Data capturing inconsistencies: When data is transferred from paper to a computer, different people may record the same value differently. For example, one person may store a company as Regal Enterprises while another stores it as Regal Ent.

- Poor data migration: Transferring data from legacy systems to a new database carries a risk of data loss or data corruption.

- Data duplication: Duplicate records make it difficult to visualize the data correctly.

Making data quality a priority:

According to recent research published by Gartner, organizations believe poor data quality is responsible for an average of $15 million per year in losses.

Two key factors should be considered in determining how to prioritize data quality issues:

- Level of impact:

- The level of impact is driven by four major factors: financial impact, regulatory impact, operational impact, and customer impact.

- The overall level of impact is the highest level found in any of these categories. For example, if the financial impact is high, the overall impact is considered high whether the other categories are high or low.

- Likelihood of occurrence:

- The likelihood-of-occurrence rule states that if the likelihood of a problem occurring is more than 70%, it is rated high; if it is more than 30% (but not more than 70%), it is rated medium; and if it is less than 30%, it is rated low.
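
The two rating rules above can be sketched in a few lines of code. This is only an illustration: the function names are hypothetical, the "medium" impact level is an assumption (the text names only high and low), and treating a likelihood of exactly 30% as low is also an assumption, since the rule leaves that boundary open.

```python
# Ordering of levels; "medium" for impact is an assumption.
LEVELS = {"low": 1, "medium": 2, "high": 3}

def impact_level(financial, regulatory, operational, customer):
    """Overall impact is the highest level across the four categories."""
    return max((financial, regulatory, operational, customer), key=LEVELS.get)

def likelihood_level(probability):
    """Map a probability of occurrence (0.0 to 1.0) to a level.

    Above 70% is high, above 30% is medium, anything else is low.
    Exactly 30% is treated as low here, which is an assumption.
    """
    if probability > 0.70:
        return "high"
    if probability > 0.30:
        return "medium"
    return "low"

# Hypothetical issue: high financial impact, everything else lower.
issue = {"financial": "high", "regulatory": "low",
         "operational": "medium", "customer": "low"}
print(impact_level(**issue))    # high
print(likelihood_level(0.45))   # medium
```

How the two ratings are combined into a single priority is a decision for each organization, so the sketch deliberately returns them separately.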

So, to make data quality a priority, consider the following steps:

- Designing an enterprise-wide data strategy.

- Creating clear user roles with rights and accountability.

- Setting up data quality management processes.

- Having a dashboard to monitor the status.

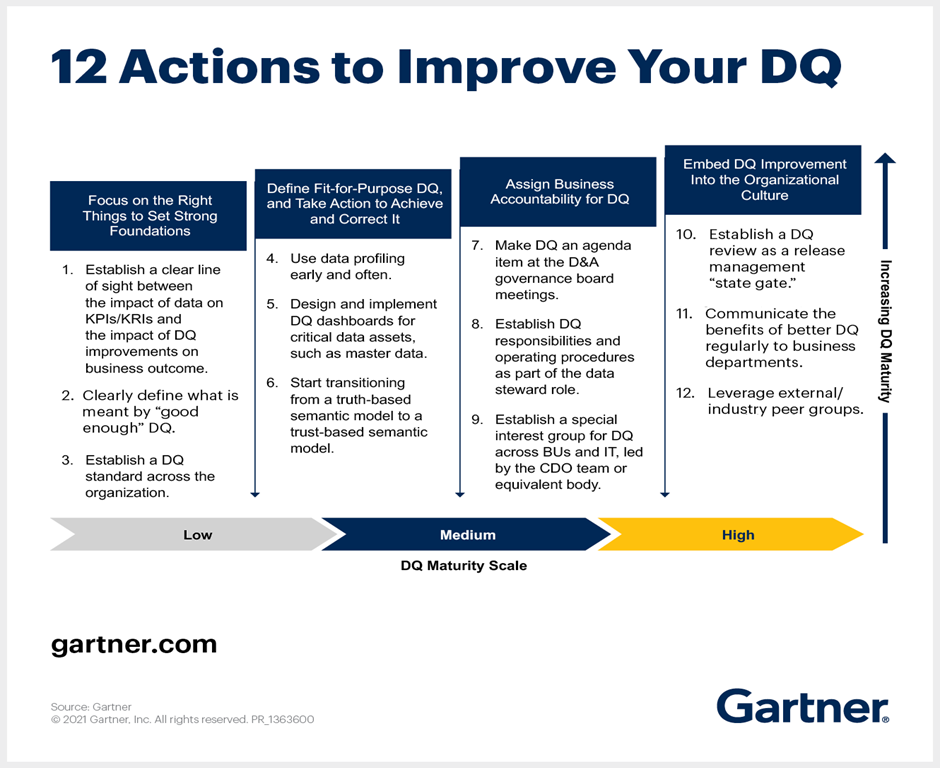

12 Actions to Improve Data Quality by Gartner:

Data Quality Management: Process Stages

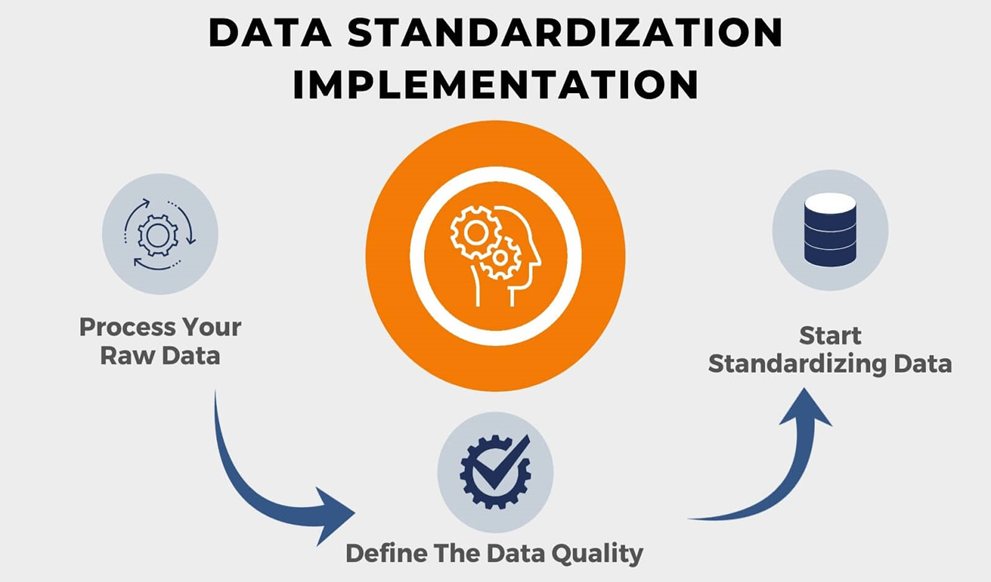

Definition & data quality rules:

Define the business goals for data quality improvement, the data owners and stakeholders, the business processes affected, and the data rules.

There is no shortcut to 100% quality data; it has to be approached by assessing various scenarios. First, 100% quality data is difficult to achieve, so companies identify their critical data based on those scenarios and on several data quality attributes. Second, companies do not necessarily need perfect data; they need data that is ‘good enough’ for the analysis. Third, if you need quality data, you need to set thresholds. Those thresholds are, in effect, your data quality rules.

Let’s take a practical example to understand the same:

Suppose you have decided that the customer’s full name and phone number are mandatory fields, each subject to data quality checks. The following rules must then hold for the data to count as quality data:

- The customer’s full name must not be null or NA (to check completeness).

- The customer’s full name must include at least one space (to check accuracy).

- The first letter of each part of the name (first, middle, and last name) must be capitalized (to check accuracy).

- The name must contain only letters, no digits (to check accuracy).

- Phone numbers must contain exactly 10 digits.

Assessment:

Now, let’s take an example to assess the data.

To measure the accuracy of the data, we apply the rules defined earlier: the customer’s full name must be present, include at least one space, have each part capitalized, and contain only letters, and the phone number must contain exactly 10 digits.

Data accuracy check:

| Customer Full Name | Phone Number | … |
| --- | --- | --- |
| Jane Brown | 987654321 | … |
| Marry Hayden | 4567891234 | … |
| Ste4e John | 1234567890 | … |
| David Henson | 9856781234 | … |
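
The check on this table can be sketched as a small script. It is a minimal illustration, not a production validator: the function name `check_row` and the wording of the messages are hypothetical, and restricting names to unaccented Latin letters and spaces is a simplifying assumption that would reject legitimate names containing hyphens, apostrophes, or accented characters.

```python
import re

# The four sample rows from the table above.
rows = [
    ("Jane Brown", "987654321"),
    ("Marry Hayden", "4567891234"),
    ("Ste4e John", "1234567890"),
    ("David Henson", "9856781234"),
]

def check_row(name, phone):
    """Return the list of rules that a (name, phone) pair violates."""
    problems = []
    if name is None or name.strip() in ("", "NA"):
        problems.append("name is missing")            # completeness
    else:
        if " " not in name.strip():
            problems.append("name has no space")      # accuracy
        if any(not part[0].isupper() for part in name.split()):
            problems.append("name part not capitalized")
        # Assumption: only unaccented Latin letters and spaces are valid.
        if not re.fullmatch(r"[A-Za-z ]+", name):
            problems.append("name contains non-letters")
    if not re.fullmatch(r"[0-9]{10}", phone or ""):
        problems.append("phone is not exactly 10 digits")
    return problems

for name, phone in rows:
    print(name, "->", check_row(name, phone) or "OK")

passed = sum(1 for name, phone in rows if not check_row(name, phone))
print(f"{passed} of {len(rows)} rows pass all rules")
```

Run on the sample, the script flags Jane Brown (the phone number has only 9 digits) and Ste4e John (the name contains a digit), so 2 of the 4 rows pass all rules.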

Analysis:

Analysis is the key to resolving data quality issues, and it should be carried out on multiple fronts. One area is to check whether there is a gap between the business goals and the current data.

Example: If customer addresses are incorrect, what is the root cause? Is there a problem with the field validations? Or are the sources from which the data is entered incorrect?

Resolve data issues/Improvement:

Design and development plans should be based on the analysis performed. Plans should cover the timeframe, the cost, and the resources required. In our earlier example of the customer’s full name, a clear standard should be provided wherever data is entered manually, along with quality-related key performance indicators for the employees responsible for keying data into the CRM systems.

For the time being, existing data can be cleaned manually, but for future data, clear standards and rules should be in place, for example that a phone number must be 10 digits with no letters allowed.

Monitoring and controlling data:

Data quality is not a one-time effort; it is a continuous process. You need to monitor your data regularly and keep enhancing the rules and validations in place. With appropriate focus from the top, data quality management can reap rich dividends for organizations.
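
As a rough sketch of what regular monitoring can look like, the snippet below compares the share of records passing all rules on each run against a threshold and reports the runs that fall short. The threshold of 95%, the dates, and the counts are all hypothetical values chosen for illustration, and `breaches` is a made-up helper name.

```python
THRESHOLD = 0.95  # hypothetical "good enough" pass rate

# Hypothetical daily results: (date, records checked, records passing all rules)
daily_runs = [
    ("2024-01-01", 1000, 982),
    ("2024-01-02", 1200, 1164),
    ("2024-01-03", 900, 810),
]

def breaches(runs, threshold):
    """Return (date, pass_rate) for every run whose pass rate is below threshold."""
    flagged = []
    for date, checked, passed in runs:
        rate = passed / checked
        if rate < threshold:
            flagged.append((date, rate))
    return flagged

for date, rate in breaches(daily_runs, THRESHOLD):
    print(f"{date}: pass rate {rate:.1%} is below the {THRESHOLD:.0%} threshold")
```

Here only the third run is flagged, with a pass rate of 90.0%. In practice the flagged runs would feed a dashboard or an alert, and the threshold itself would be reviewed as the rules evolve.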

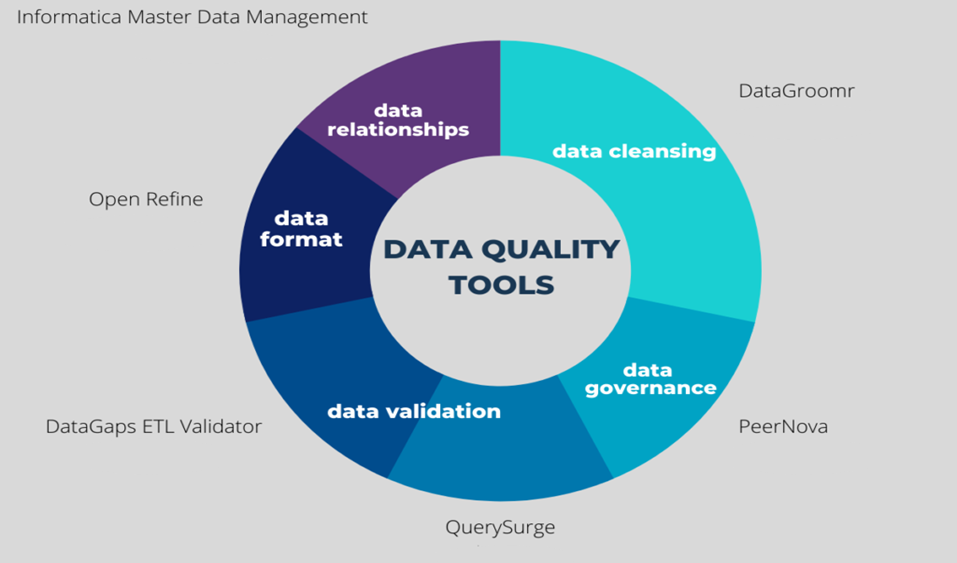

Categories of Data Quality Tools:

- Parsing and standardization: These tools break the data into components and bring them into a unified format.

- Cleansing: These tools remove duplicate records or modify values so that they meet certain rules and standards.

- Matching: These tools help find, compare, and merge data from different sources, which improves the quality, accuracy, and completeness of the data.

- Profiling: This is a process that analyzes the data to understand its structure, content, relationships, and derivation rules. It is used to gain insight into the quality of the data by applying various algorithms and evaluating conditions.

- Monitoring: These tools track and analyze the data to assess the quality of the data stored within the system.

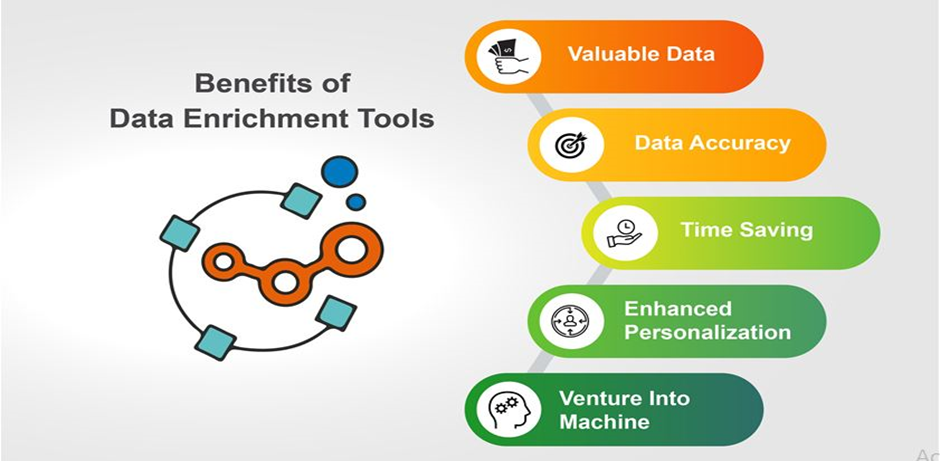

- Enrichment: These tools merge in data from third-party sources, which supports detailed analysis.
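
To make the first few categories concrete, here is a minimal sketch of standardizing company names and then matching the variants, using the Regal Enterprises example from earlier. It is not how any particular tool works: the abbreviation table, the extra name Acme Corp, and the functions `standardize` and `group_matches` are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical abbreviation table; a real one would come from the data owners.
ABBREVIATIONS = {"ent": "enterprises", "corp": "corporation", "ltd": "limited"}

def standardize(name):
    """Lowercase, strip punctuation, and expand known abbreviations."""
    tokens = re.sub(r"[^a-z0-9 ]", "", name.lower()).split()
    return " ".join(ABBREVIATIONS.get(token, token) for token in tokens)

def group_matches(names):
    """Group raw names that share the same standardized form."""
    groups = defaultdict(list)
    for name in names:
        groups[standardize(name)].append(name)
    return dict(groups)

names = ["Regal Enterprises", "Regal Ent.", "regal ent", "Acme Corp"]
for key, variants in group_matches(names).items():
    print(key, "<-", variants)
```

The three Regal variants end up in one group under the key `regal enterprises`, which is the point at which a cleansing step could merge them into a single record. Exact matching on a standardized key is the simplest possible approach; it will miss misspellings and abbreviations that are not in the table.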

Summing up data quality management:

Data quality management guards you against low-quality data that can discredit your data analytics efforts. Doing it well, however, means keeping many aspects in mind. Choosing metrics to assess data quality, selecting tools, and defining data quality rules and thresholds are just a few of the steps.

Author: Fatema Rangwala